An earlier article suggests we should pay attention to gamma, at least when the output is 1-bit deep. How should we dither when we want the output to be more than 1 bit deep, say when dithering 8-bit input down to 2-bit output?

We need to decide:

– What output codes shall we use?

– How do we choose each output code?

A general purpose dithering routine can use an arbitrary set of output codes. The number of output codes is determined by the desired bit-depth of the output. If we have a 2-bit output file, then that’s 4 output codes.

The easiest output codes to use are linear ones, because we’re doing the dithering in linear space (we are, right? Well, part of the reason for this blog article is to explore when that is worth doing). For example, a 2-bit output code in linear space can be selected with «int(round(v*3.0))». There’s a problem: this sucks. It sucks for all the same reasons that we use perceptual codes in the first place: we’re pissing away (output) precision on areas of the colour space where humans can’t perceive differences anyway; not enough buck for each bit.

This 8-bit ramp is dithered down to 2 bits, and the gamma (of the 2-bit output image) is 1.0:

Black pixels extend from the left almost to the middle, so of the three adjacent pairs of output colours (0 and 1, 1 and 2, 2 and 3), just one, 0 and 1, is dithered over 50% of the image area. That doesn’t seem like a good thing. Also, the 2 lightest colours are very close to each other (perceptually), so the right-hand end of the ramp looks “blown out”, with not enough shading.
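A rough way to see this lopsidedness in numbers: assume (my assumption, not something stated above) that the input ramp is coded with a pure power-law gamma of 2.2, so ramp position p has linear intensity p ** 2.2. Output code k is then reproduced exactly at ramp position (k/3) ** (1/2.2), and error diffusion mixes codes k and k+1 across the stretch between two such positions. The helper `band_fractions` is hypothetical.

```python
# Assumption: the input ramp uses a pure power-law gamma of 2.2
# (a simplification; real images are often sRGB).
INPUT_GAMMA = 2.2


def band_fractions(linear_codes, input_gamma=INPUT_GAMMA):
    """For each adjacent pair of output codes (ascending linear intensities),
    return the fraction of a perceptually coded ramp over which error
    diffusion will mix that pair."""
    # Ramp position at which each output code is reproduced exactly.
    positions = [c ** (1.0 / input_gamma) for c in linear_codes]
    return [hi - lo for lo, hi in zip(positions, positions[1:])]


# 2-bit output with gamma 1.0: codes evenly spaced in linear intensity.
linear_2bit = [k / 3.0 for k in range(4)]
for pair, frac in enumerate(band_fractions(linear_2bit)):
    print(f"{pair} and {pair + 1}: {frac:.0%} of the ramp")
```

Under that assumption the 0 and 1 pair takes up more than half of the ramp while the two lightest pairs share what is left, which fits the description above. Assuming an input gamma of 1.8 instead shifts the numbers but not the pattern.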

So, the output codes ought to be in some sort of perceptual space (mostly); it makes sense to allow the user to specify the output codes. Not just how many output codes (the bit depth), but also the distribution of the output codes, which amounts to selecting the output gamma. Yeah, I guess in general you want to specify some calibrated colour space; gamma is a simplification.
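As a sketch of what specifying the output gamma could mean, assuming a pure power law rather than a calibrated colour space: with n output codes, code k is stored as k/(n-1), and a display with gamma `output_gamma` turns that into linear intensity (k/(n-1)) ** output_gamma. The `output_codes` helper is hypothetical, not pipdither’s interface.

```python
def output_codes(bits, output_gamma):
    """Return the linear intensity of each output code for an image of the
    given bit depth, assuming a pure power-law output gamma."""
    n = 2 ** bits
    top = n - 1
    # Code k is written to the file as k/top; the display maps that to
    # linear intensity (k/top) ** output_gamma.
    return [(k / top) ** output_gamma for k in range(n)]


linear = output_codes(2, 1.0)      # evenly spaced in linear intensity
perceptual = output_codes(2, 1.8)  # middle codes pulled down towards black
print(linear)
print(perceptual)
```

With gamma 1.8 the first non-black code sits at roughly 14% linear intensity rather than 33%, so the four codes are spread more evenly in perceptual terms.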

Two curves show the difference between picking output codes in linear space (gamma = 1.0) and in a perceptual space (gamma = 1.8). The (relative) intensity values appear on the right:

Error diffusion dithering (which is what pipdither does) is a general term for a number of algorithms, all of which boil down to:

– pick an order to consider the pixels of an image;

– for each pixel:

  – from the pixel’s value v, select an output code with value t;

  – the error, v-t, is distributed to pixels not yet considered by adding some fraction of the error onto each of a number of pixels.

The differences between most error diffusion dithering algorithms amount to which pixels the error is distributed to and what fraction each pixel receives. I won’t be considering the error diffusion step in detail in this article.
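The outline above can be made concrete with one scan order (left to right, top to bottom) and one distribution rule, the well-known Floyd-Steinberg weights of 7/16, 3/16, 5/16 and 1/16. Both are choices made for illustration and not necessarily what pipdither does; `error_diffuse` is a hypothetical name. It works on linear intensities and picks the nearest output code.

```python
def error_diffuse(image, codes):
    """Dither `image` (rows of linear intensities in [0, 1]) onto `codes`
    (ascending linear intensities). Returns rows of code indices."""
    height, width = len(image), len(image[0])
    work = [list(row) for row in image]  # copy; diffused error accumulates here
    out = [[0] * width for _ in range(height)]
    # (dx, dy, fraction) for neighbours not yet considered (Floyd-Steinberg).
    weights = [(1, 0, 7 / 16), (-1, 1, 3 / 16), (0, 1, 5 / 16), (1, 1, 1 / 16)]
    for y in range(height):
        for x in range(width):
            v = work[y][x]
            # Select the output code nearest to v (ties go to the lower code).
            k = min(range(len(codes)), key=lambda i: abs(codes[i] - v))
            out[y][x] = k
            err = v - codes[k]
            # Share the error out; error falling off the image edge is dropped.
            for dx, dy, frac in weights:
                nx, ny = x + dx, y + dy
                if 0 <= nx < width and ny < height:
                    work[ny][nx] += err * frac
    return out


grey = [[0.5] * 8 for _ in range(4)]
for row in error_diffuse(grey, [0.0, 1.0]):
    print(row)
```

Swapping in a different `weights` table (or a different scan order) gives the other members of the family; the code-selection line is the part the rest of this article is about.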

So, how do we choose the output code? Since we are doing the dithering in linear space, we have presumably converted the input image into linear values (greyscale intensity). The easy thing then is to arrange the output codes in the same linear space (in other words, convert all the output codes into linear space) and pick the output code nearest to the value of each pixel. Actually, pipdither allows you to favour higher codes or lower codes by placing the cutoff point between each pair of adjacent output codes some fraction of the way between them; the fraction can be specified (with the -c option, in the unlikely event that anyone actually wants to use pipdither) and defaults to 0.75, favouring lower output codes. I may explain why in a later article.
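One way to express the cutoff rule as a function, as a sketch of the idea described above rather than pipdither’s source: `select_code` and its `cutoff` parameter are hypothetical names, with `cutoff` playing the role of the fraction that -c sets.

```python
import bisect


def select_code(v, codes, cutoff=0.75):
    """Return the index of the output code for linear value v.

    `codes` are ascending linear intensities. The switch from code k to
    code k+1 happens `cutoff` of the way from codes[k] to codes[k+1]:
    0.5 picks the nearest code, larger values favour lower codes."""
    # Index of the highest code not above v, clamped to the valid range.
    k = bisect.bisect_right(codes, v) - 1
    if k < 0:
        return 0
    if k >= len(codes) - 1:
        return len(codes) - 1
    lo, hi = codes[k], codes[k + 1]
    threshold = lo + cutoff * (hi - lo)
    return k + 1 if v >= threshold else k


codes = [0.0, 1 / 3, 2 / 3, 1.0]
print(select_code(0.2, codes))              # 0: below the 0.75 cutoff
print(select_code(0.2, codes, cutoff=0.5))  # 1: nearest code
```

With a cutoff of 0.5 this is plain nearest-code selection; with 0.75 a value has to get three quarters of the way from one code to the next before the higher code is chosen, which is what favouring lower codes amounts to.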

The one problem with this algorithm: the images it produces look almost the same as those from the naïve algorithm that just ignores all considerations of gamma (pretending that the input and output are linear when in fact both are coded in perceptual space). So our new, improved algorithm is just pissing away CPU time for almost no gain in image quality. Still, at least we can be smug in the knowledge that it Does The Right Thing.
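To pin down where the two approaches differ, the sketch below dithers a flat row of dark grey both ways. The assumptions are mine, not the article’s: input and 2-bit output both use a pure power-law gamma of 2.2, and the error diffusion is the simplest possible 1-D scheme, in which each pixel passes all of its error to the next one. `dither_row`, `naive`, `gamma_aware` and `mean_linear` are hypothetical helpers.

```python
GAMMA = 2.2  # assumed gamma of both the input and the 2-bit output
N_CODES = 4


def dither_row(values, codes):
    """1-D error diffusion: all of each pixel's error goes to the next pixel."""
    out, err = [], 0.0
    for v in values:
        v += err
        k = min(range(len(codes)), key=lambda i: abs(codes[i] - v))
        out.append(k)
        err = v - codes[k]
    return out


def naive(encoded):
    # Pretend the encoded values are linear and the codes evenly spaced.
    return dither_row(encoded, [k / (N_CODES - 1) for k in range(N_CODES)])


def gamma_aware(encoded):
    # Convert input and output codes to linear intensity before dithering.
    linear = [p ** GAMMA for p in encoded]
    codes = [(k / (N_CODES - 1)) ** GAMMA for k in range(N_CODES)]
    return dither_row(linear, codes)


def mean_linear(indices):
    # Average linear intensity a display with gamma GAMMA would emit.
    return sum((k / (N_CODES - 1)) ** GAMMA for k in indices) / len(indices)


row = [0.25] * 300
print(mean_linear(gamma_aware(row)), mean_linear(naive(row)))
```

For a row of encoded value 0.25, the gamma-aware version averages out to the intended linear intensity (0.25 ** 2.2, about 0.047), while the naïve version, which preserves the average encoded value instead, comes out brighter in linear terms (about 0.067). Whether that arithmetic difference is visible in a real picture is exactly what the images here try to answer.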

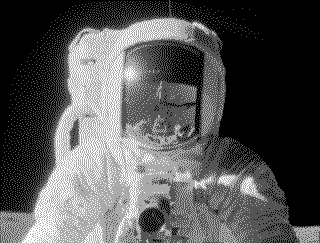

Can we actually tell any difference between my Right Thing gamma-aware algorithm and the naïve algorithm, which knows nothing about gamma (and hence just pretends everything is linear)? The middle image is a greyscale gradient with 256 grey values (8-bit). The other two images are dithers down to 2 bits. Can you tell which image is which algorithm?

Here are a couple of spacemen. I think the difference between the two algorithms is more pronounced:

And I’d just like to point out that because of Apple’s 2-bit PNG bug, all of these “2-bit” PNGs are in fact 8-bit PNGs that just happen to use only 4 codes (I use pipdither -b 8 to increase a PNG image’s bit depth; no dithering takes place in that case).
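Raising the bit depth without dithering is just a rescaling of each sample. The sketch below uses the linear scaling with rounding that the PNG specification describes for changing sample depth; that pipdither -b 8 does exactly this is an assumption, and `rescale` is a hypothetical helper. For 2-bit to 8-bit the factor is exactly 255/3 = 85.

```python
def rescale(sample, in_bits, out_bits):
    """Convert an integer sample from in_bits to out_bits by linear scaling
    with rounding: floor(sample * max_out / max_in + 1/2)."""
    max_in = (1 << in_bits) - 1
    max_out = (1 << out_bits) - 1
    # Integer-only form of floor(sample * max_out / max_in + 0.5).
    return (2 * sample * max_out + max_in) // (2 * max_in)


print([rescale(k, 2, 8) for k in range(4)])  # [0, 85, 170, 255]
```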

2009-04-30 at 17:51:53

Blind test here confirms that the top bar looks, slightly, better than the bottom one.

2009-04-30 at 18:05:31

(that is, in Firefox on my random cheap Tosh laptop screen with default everything)

2009-05-22 at 21:52:23

David,

You should honor tradition, and illustrate dithering algorithms with the picture of the November 1972 Playmate.

Out of sheer curiosity, does anybody actually do dithering on image files? I mean, with today’s available storage capacities, is it really worth the lost quality?

I am, by the way, an R&D engineer for HP, and deal with this kind of stuff all the time, but we definitely need to turn 8-bit values into “lay 1, 2, 3 or none drops of ink.” We need not worry about deciding on the gamma thing though: it’s an ink property.

2009-06-01 at 15:30:10

@Jaime: When you display a 16-bit grayscale PNG on an 8-bit display (in other words, a typical display), should you dither?

As to why I don’t use Lenna, I have several reasons:

– I had never heard of the image;

– it’s a colour image and pipdither currently handles only single-channel images;

– it’s copyright;

– it’s sexist.

2009-06-04 at 13:02:32

When you say sexist, I think you mean suggestive. Lenna does not discriminate and I am sure she would be terribly upset at the accusation.

2009-06-04 at 13:58:35

Your speculations as to what I might mean are noted.